by Timon Meyer, Fraunhofer-Institut für Nachrichtentechnik, Heinrich-Hertz-Institut, HHI

In a new paper published in Nature Geoscience, experts from Fraunhofer Heinrich-Hertz-Institut (HHI) advocate for the use of explainable artificial intelligence (XAI) methods in geoscience.

The researchers aim to facilitate the broader adoption of AI in geoscience (e.g., in weather forecasting) by revealing the decision processes of AI models and fostering trust in their results. Fraunhofer HHI, a world leader in XAI research, coordinates a UN-backed global initiative that is laying the groundwork for international standards in the use of AI for disaster management.

AI offers unparalleled opportunities for analyzing data and solving complex and nonlinear problems in geoscience. However, as the complexity of an AI model increases, its interpretability may decrease. In safety-critical situations, such as disasters, the lack of understanding of how a model works—and the resulting lack of trust in its results—can hinder its implementation.

XAI methods address this challenge by providing insights into AI systems and helping to identify data- or model-related issues. For instance, XAI can detect “false” (spurious) correlations in training data, that is, correlations that are irrelevant to the AI system’s specific task and may distort its results.
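To make the idea of a “false” correlation concrete, here is a minimal sketch using permutation importance, one generic explanation technique; the article does not say which methods the authors used. Everything in it is a hypothetical illustration: the toy flood data, the feature names `rainfall` and `sensor_flag`, and the `shortcut_model` that has latched onto an artifact instead of the physical signal.

```python
import random


def permutation_importance(model, rows, labels, feature, rng, repeats=20):
    """Average drop in accuracy when one feature column is shuffled."""
    def accuracy(data):
        return sum(model(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    drops = []
    for _ in range(repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        # Copy each row, replacing only the shuffled feature.
        shuffled = [dict(r, **{feature: v}) for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(shuffled))
    return sum(drops) / repeats


rng = random.Random(0)

# Hypothetical toy data: rainfall carries the physical signal, while
# sensor_flag is an artifact that happens to match the label exactly.
rows, labels = [], []
for _ in range(200):
    flood = rng.random() < 0.5
    rows.append({
        "rainfall": rng.gauss(80 if flood else 40, 10),
        "sensor_flag": int(flood),
    })
    labels.append(flood)


def shortcut_model(row):
    """Hypothetical model that learned the artifact, not the physics."""
    return row["sensor_flag"] == 1


importances = {
    name: permutation_importance(shortcut_model, rows, labels, name, rng)
    for name in ("rainfall", "sensor_flag")
}
print(importances)
```

In this toy setting, shuffling `rainfall` leaves accuracy unchanged while shuffling `sensor_flag` degrades it sharply, which exposes that the model's predictions rest on the artifact rather than on the physically meaningful variable.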

“Trust is crucial to the adoption of AI. XAI acts as a magnifying lens, enabling researchers, policymakers, and security specialists to analyze data through the ‘eyes’ of the model so that dominant prediction strategies—and any undesired behaviors—can be understood,” explains Prof. Wojciech Samek, Head of Artificial Intelligence at Fraunhofer HHI.

The paper’s authors analyzed 2.3 million arXiv abstracts of geoscience-related articles published between 2007 and 2022. They found that only 6.1% of papers referenced XAI. Considering its immense potential, the authors sought to identify challenges preventing geoscientists from adopting XAI methods.
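A share like this can be estimated with simple keyword matching over abstracts. The sketch below is only an illustration under stated assumptions: the keyword pattern and the four sample abstracts are hypothetical, and the article does not describe the search terms or procedure the authors actually used.

```python
import re

# Hypothetical keyword pattern; the authors' actual search terms are not
# given in the article.
XAI_PATTERN = re.compile(r"\b(xai|explainab\w*|interpretab\w*)\b", re.IGNORECASE)


def xai_share(abstracts):
    """Return the percentage of abstracts that mention an XAI keyword."""
    if not abstracts:
        return 0.0
    hits = sum(1 for text in abstracts if XAI_PATTERN.search(text))
    return 100.0 * hits / len(abstracts)


# Made-up sample abstracts for illustration only.
sample = [
    "Explainable AI for landslide susceptibility mapping.",
    "Deep learning for flood nowcasting.",
    "Seismic inversion with convolutional networks.",
    "A statistical study of drought indices.",
]

share = xai_share(sample)
print(f"{share:.1f}% of sample abstracts mention XAI")
```

A keyword count of this kind measures only whether XAI is mentioned, not whether it was applied, so it is best read as a rough indicator of uptake.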

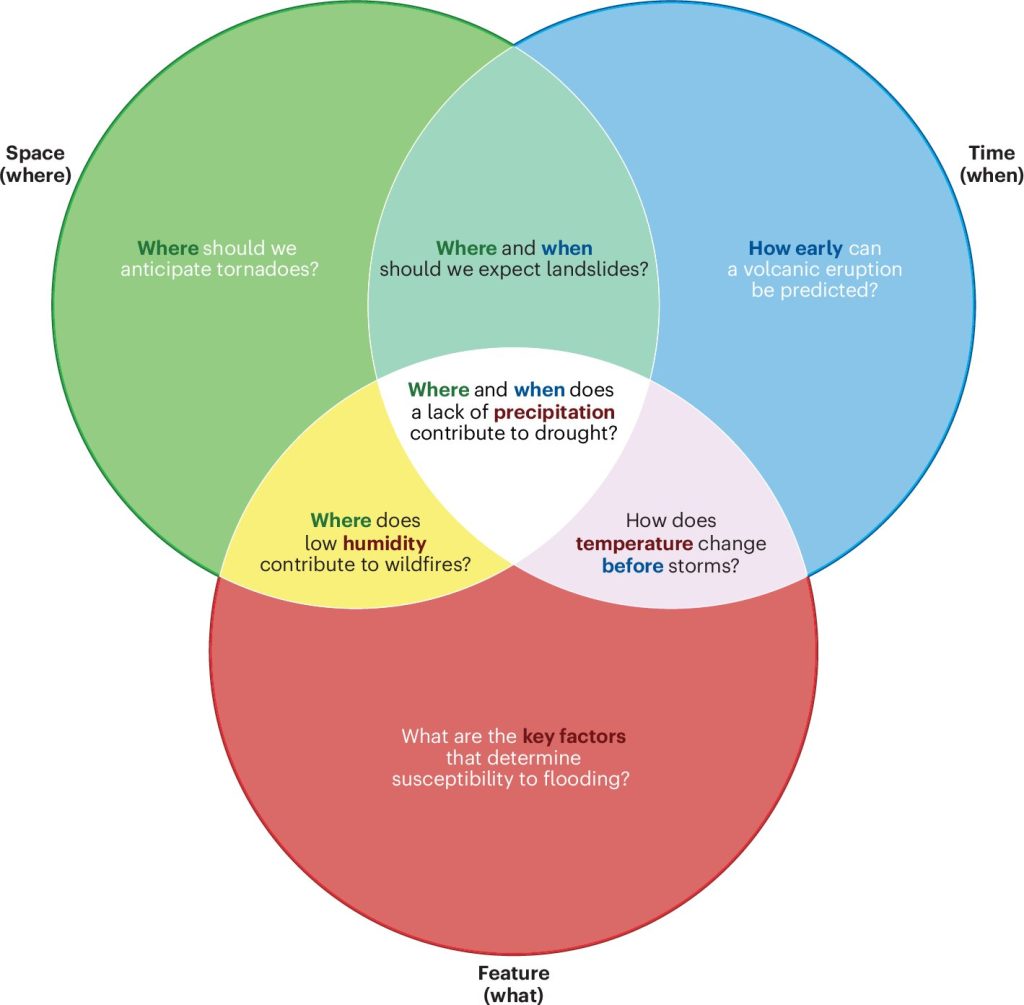

Focusing on natural hazards, the authors examined use cases curated by the International Telecommunication Union/World Meteorological Organization/UN Environment Focus Group on AI for Natural Disaster Management. After surveying researchers involved in these use cases, the authors identified key motivations and hurdles.

Motivations included building trust in AI applications, gaining insights from data, and improving AI systems’ efficiency. Most participants also used XAI to analyze their models’ underlying processes. Conversely, those not using XAI cited the effort, time, and resources required as barriers.

“XAI has a clear added value for the geosciences—improving underlying datasets and AI models, identifying physical relationships that are captured by data, and building trust among end users—I hope that once geoscientists understand this value, it will become part of their AI pipeline,” says Dr. Monique Kuglitsch, Innovation Manager at Fraunhofer HHI and Chair of the Global Initiative on Resilience to Natural Hazards Through AI Solutions.

To support XAI adoption in geoscience, the paper provides four actionable recommendations:

- Fostering demand from stakeholders and end users for explainable models.

- Building educational resources for XAI users, covering how different methods function, what explanations they can provide, and what their limitations are.

- Building international partnerships to bring together geoscience and AI experts and promote knowledge sharing.

- Supporting integration with streamlined workflows for standardization and interoperability of AI in natural hazards and other geoscience domains.

In addition to Fraunhofer HHI experts Monique Kuglitsch, Ximeng Cheng, Jackie Ma, and Wojciech Samek, the paper was co-authored by Jesper Dramsch, Miguel-Ángel Fernández-Torres, Andrea Toreti, Rustem Arif Albayrak, Lorenzo Nava, Saman Ghaffarian, Rudy Venguswamy, Anirudh Koul, Raghavan Muthuregunathan, and Arthur Hrast Essenfelder.

More information:

Jesper Sören Dramsch et al, Explainability can foster trust in artificial intelligence in geoscience, Nature Geoscience (2025). DOI: 10.1038/s41561-025-01639-x

Citation: Experts underscore the value of explainable AI in geosciences (2025, February 5), retrieved 5 February 2025 from https://phys.org/news/2025-02-experts-underscore-ai-geosciences.html
