There’s a steep cost to reasoning AI, or AI that can provide a step-by-step breakdown of its thought process along with a response.

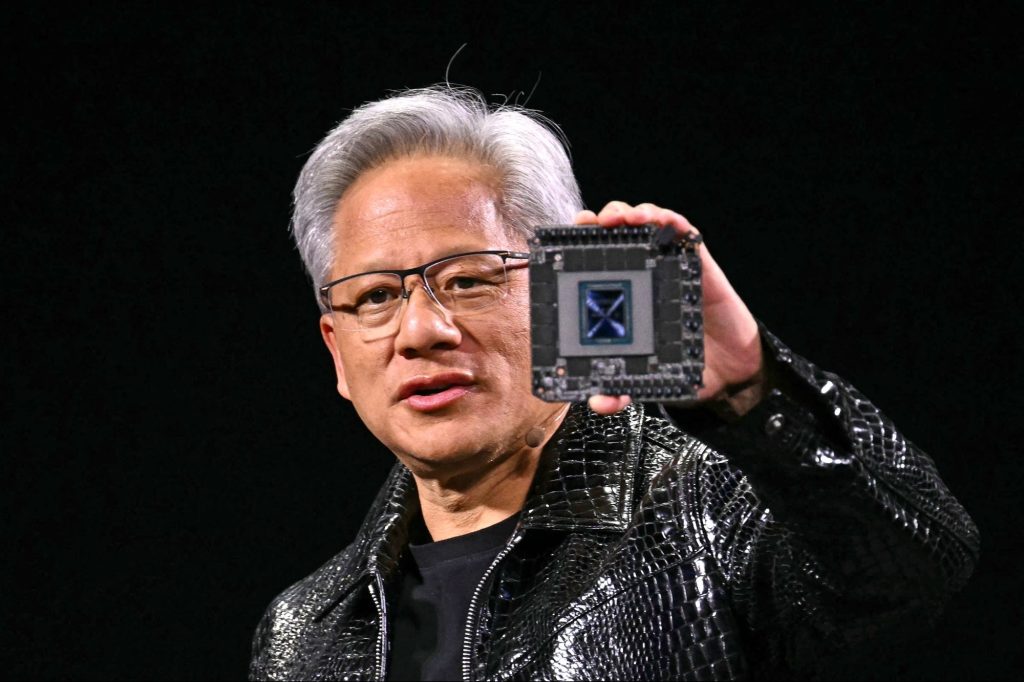

Nvidia CEO Jensen Huang told CNBC on Wednesday that next-generation AI models capable of reasoning through problems before arriving at an answer, such as ChatGPT 4o and Grok 3, require "100 times more" computing power than older AI models, which can mean as many as 100 times the number of Nvidia's AI chips.

That requirement drives demand, and sales, for the Nvidia AI chips needed to support those reasoning capabilities, which sell for up to $70,000 each. Nvidia holds between 70% and 95% of the market for AI chips.

“We’re just at the beginning of the reasoning AI era,” Huang told CNBC following the AI chipmaker’s fourth-quarter earnings report. “All of these reasoning AI models now need a lot more compute than what we’re used to.”

In the interview, Huang touted high demand for Nvidia's Blackwell AI chip, which generated $11 billion in revenue in the fourth quarter, its first quarter on the market. Revenue from Nvidia's data center business, which supplies the chips that power AI systems for companies like Meta, Microsoft, and Amazon, almost doubled from a year earlier to $35.6 billion, and Huang says the growth is set to continue.

“This year’s capital investment for data centers is so much greater than last year’s,” Huang told CNBC.

Meta and Microsoft, two of Nvidia's biggest customers, have already committed billions of dollars this year to AI infrastructure such as data centers. Meta said it would spend up to $65 billion on AI, while Microsoft said it would spend $80 billion.

Nvidia CEO Jensen Huang. Photo by Patrick T. Fallon / AFP via Getty Images

Nvidia beat Wall Street estimates on Wednesday with its latest earnings report. The AI giant reported that quarterly revenue rose 78% from a year earlier to $39.33 billion, above Wall Street estimates of $38.05 billion.

On the earnings call, Nvidia chief financial officer Colette Kress reiterated Huang's message about the additional computing power, and the corresponding Nvidia AI chips, required to fuel reasoning AI.

“Long-thinking, reasoning AI can require 100 times more compute per task compared to one-shot inferences,” Kress told investors.

Nvidia is the second-biggest company in the world, with a market cap of over $3.1 trillion at the time of writing.